ASIP eUpdate, January 2017

Welcome to the latest issue of the ASIP eUpdate Newsletter, our bi-annual publication to keep you informed on technical topics related to application-specific instruction-set processor (ASIP) design. In the previous two newsletters, we covered some example models that are provided with ASIP Designer™. Judging from your feedback, this topic seems to be of high interest, so we decided to give even more room to it this time. The other main topic is an update on the 2016.09 release of ASIP Designer. We hope you enjoy today’s issue, and we look forward to your feedback and suggestions.

ASIPs are a proven solution for domain-specific, application-optimized processors. They also often serve as a more flexible yet equally efficient alternative to fixed RTL implementations; in that role they are commonly called programmable accelerators. ASIPs come with an architecture and instruction set tuned for a specific application domain. To reach high performance and low power, they rely on techniques similar to those used in the design of hardware accelerators: heavy use of parallelism and specialized datapath elements. Yet ASIPs retain software programmability within their application domain, resulting in C/C++ programmable processors and accelerators with very low power consumption.

What's New in ASIP Designer?

The September release of ASIP Designer (2016.09) is available.

The June Newsletter previewed the LLVM-based C/C++ compiler frontend capabilities in ASIP Designer; these are now fully released. To recap, for ASIP Designer we have extended the LLVM compiler infrastructure to support the full architectural freedom needed for the design of highly specialized and differentiated processor architectures. Extensions include:

- Instantaneous adaptation of the compiler to the ASIP Designer processor model (compiler-in-the-loop™).

- Increased flexibility in data types, supporting non-power-of-two C built-in types. For example, char, short, long, and long long can have a width that is not a power of two.

- Exploitation of all application types provided in the ASIP Designer processor model, including Vector (SIMD) data types and user-defined (custom) data types.

- Multiple address spaces. The multiple memories defined in nML are accessible in the LLVM flow through the same memory specifiers. Each address space can have its own pointer width and addressable unit size, so data memories no longer need to be byte-addressable.

- Native pointer support. The ASIP Designer processor model may implement pointer arithmetic differently from integer arithmetic. For example, pointers can have a different width and can be mapped to dedicated address generation units and their corresponding registers.

- The LLVM flow automatically exploits the full capabilities of the underlying processor model, with optimizations such as selection of zero-overhead loop instructions and aggressive software pipelining.
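Non-power-of-two types change more than storage size: arithmetic wraps at the type's own width. As a minimal sketch, assuming a hypothetical processor model in which `int` is 24 bits wide, the following Python code emulates the two's-complement results such a datapath would produce. The width and the helper names (`wrap`, `add`, `mul`) are illustrative only and are not part of ASIP Designer.

```python
# Minimal sketch: emulate two's-complement arithmetic for a HYPOTHETICAL
# 24-bit C integer type, as a native 24-bit datapath would compute it.
WIDTH = 24                      # assumed width, chosen only for illustration
MASK = (1 << WIDTH) - 1
SIGN_BIT = 1 << (WIDTH - 1)

INT24_MAX = SIGN_BIT - 1        # 8388607
INT24_MIN = -SIGN_BIT           # -8388608


def wrap(value: int) -> int:
    """Reduce an arbitrary integer to a signed WIDTH-bit value."""
    value &= MASK
    return value - (1 << WIDTH) if value & SIGN_BIT else value


def add(a: int, b: int) -> int:
    return wrap(a + b)


def mul(a: int, b: int) -> int:
    return wrap(a * b)


print(add(INT24_MAX, 1) == INT24_MIN)   # True: the sum wraps around
print(mul(4096, 4096))                  # 0, since 2**24 wraps to zero
```

Keep in mind that signed overflow is undefined behavior in C, so portable code should not rely on this wrap-around; the emulation only shows what the hardware computes.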

Additional updates in the latest release include:

- ASIP Designer comes with extensive C and C++ library support, providing both a full-featured stack and a lightweight stack. The lightweight stack is tailored to the needs of embedded designs and yields significant savings in both cycle count and number of instruction words.

- Software pipelining was further enhanced by a new modulo scheduling algorithm.

- Compilation times have been reduced by 25% to 50%.

- Just-in-Time (JIT) compilation support is now also available in instruction-accurate simulation mode, so instruction-accurate (IA) and cycle-accurate (CA) simulation share the same use model. Both the IA and CA simulators are generated from the same single nML model, so there is no need to maintain two separate models. The automatic TLM2 interface generation is now also fully aligned for the CA and IA simulation models.

- Generation of SystemVerilog classes to enable random instruction generation using the UVM methodology.

- The RISC-V ISA example model has been officially released and is available to all licensees of ASIP Designer.

There are many more enhancements in the 2016.09 release. For more information, contact: [email protected]. Also be sure to check out the updated and extended ASIP Designer data sheet to get an overview of the features and capabilities of ASIP Designer.

Example Processor Models: Instruction Level Parallelism

In our December 2015 issue we gave an overview of the example models available with ASIP Designer. Designers can choose from this extensive library of example ASIP models, which are provided as nML source code. These models are an excellent reference for learning how to model specific processor functionality in nML, how the compiler can take advantage of architectural specialization, and how to leverage the generated SDK. In combination with ASIP Designer, these models can serve as a starting point for architectural exploration and for customer-specific production designs. In the June 2016 newsletter, we focused on adding data-level parallelism (SIMD) to processor architectures. In this issue, we consider adding instruction-level parallelism (ILP), taking a closer look at two example models: Tdsp and Tvliw.

Instruction-level parallelism is one of the three main features that enable efficient application-specific architectures, alongside SIMD and architectural specialization. With ILP, the instruction set encodes the combinations of operations that the architecture can execute in parallel. It is then up to the C compiler to generate, at compile time, the instructions that exploit this parallelism. This is often superior to superscalar architectures, which need complex and costly hardware blocks to dispatch instructions in parallel at run time. It does, however, require a very sophisticated compiler.
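To make the compile-time approach concrete, here is a minimal sketch of greedy list scheduling, a textbook technique for packing independent operations into parallel instructions. It assumes a hypothetical machine with two interchangeable slots and a one-cycle latency for every operation; the dependence graph and the `schedule` function are illustrative and are not the ASIP Designer scheduler.

```python
# Minimal sketch of compile-time instruction bundling (greedy list scheduling).
# Assumptions: every operation has a one-cycle latency, any operation can go
# into any slot, and the machine issues at most SLOTS operations per instruction.
SLOTS = 2

# Dependence graph for: t1 = a*b; t2 = c*d; t4 = e+f; t3 = t1+t2; r = t3-t4
deps = {
    "t1": [], "t2": [], "t4": [],
    "t3": ["t1", "t2"],
    "r":  ["t3", "t4"],
}


def schedule(deps, slots):
    done = {}                      # op -> cycle in which it was issued
    bundles = []
    while len(done) < len(deps):
        cycle = len(bundles)
        # An op is ready once all its predecessors issued in an earlier cycle.
        ready = [op for op, preds in deps.items()
                 if op not in done and all(p in done and done[p] < cycle for p in preds)]
        bundle = ready[:slots]     # fill the available slots in listed order
        for op in bundle:
            done[op] = cycle
        bundles.append(bundle)
    return bundles


for i, b in enumerate(schedule(deps, SLOTS)):
    print(f"cycle {i}: {b}")
```

With two slots, the five operations fit in three instructions instead of five. A superscalar processor would have to discover the same grouping in hardware at run time.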

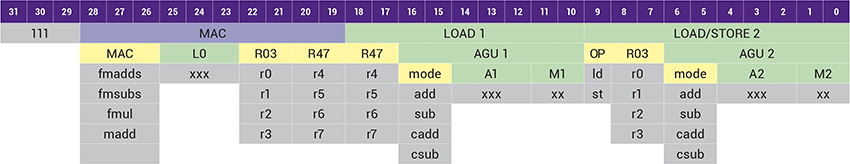

Tdsp example. Tdsp is a 16/32-bit DSP processor featuring multiply-accumulate, fractional data types, dual-port memory access, circular addressing, and zero-overhead hardware loops. The Tdsp instruction set allows a multiply-accumulate operation to execute in parallel with two memory accesses and with the updates of both memory addresses. Note that these five parallel operations are controlled by a relatively short 32-bit instruction word, shown in the following table.

The compact instruction format is made possible by carefully selecting the most important opcodes for the parallel format and by restricting the registers that can be used:

- The MAC field supports six different operations, and the multiplier inputs are limited to registers R0–R3 for one operand and R4–R7 for the other.

- The LOAD 1 field supports load operations into data registers R4 – R7. The addresses and address modifier values are stored in register files A1 and M1, which are dedicated to address generation unit (AGU) 1.

- The LOAD/STORE 2 field supports load and store operations on data registers R0 – R3. The second AGU has its own set of registers A2 and M2.
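The effect of restricting opcodes and registers can be made concrete with a bit budget. The sketch below packs the five parallel operations into one 32-bit word; the field order and widths are hypothetical (the real Tdsp layout is the one in the table) and only illustrate why a 2-bit register field suffices when each operand can address just four registers.

```python
# Minimal sketch: pack a five-way parallel instruction into a 32-bit word.
# The field list and widths below are HYPOTHETICAL; they only illustrate how
# restricted opcode and register sets keep the encoding compact.
FIELDS = [                 # (name, width in bits), packed from bit 0 upward
    ("mac_op",   3),       # one of six MAC operations
    ("mac_x",    2),       # first multiplier operand: R0-R3
    ("mac_y",    2),       # second multiplier operand: R4-R7 (encoded as 0-3)
    ("ld1_dst",  2),       # LOAD 1 destination: R4-R7
    ("ld1_a",    2),       # address register in A1
    ("ld1_m",    2),       # modifier register in M1
    ("ls2_st",   1),       # 0 = load, 1 = store
    ("ls2_reg",  2),       # LOAD/STORE 2 data register: R0-R3
    ("ls2_a",    2),       # address register in A2
    ("ls2_m",    2),       # modifier register in M2
]
FORMAT_BITS = 32


def encode(**values):
    """Pack named field values into one instruction word."""
    word, pos = 0, 0
    for name, width in FIELDS:
        v = values.get(name, 0)
        if not 0 <= v < (1 << width):
            raise ValueError(f"{name}={v} does not fit in {width} bits")
        word |= v << pos
        pos += width
    assert pos <= FORMAT_BITS, "fields exceed the instruction word"
    return word


used = sum(w for _, w in FIELDS)
print(f"{used} of {FORMAT_BITS} bits used; {FORMAT_BITS - used} left for format/control bits")
```

If each of the four data-register fields had to address all of R0–R7, it would need a third bit; restricted register sets and a reduced opcode list are what leave room in the word for format and control bits.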

In addition to this five-way parallel format, Tdsp has other instruction formats that support a richer set of opcodes and a more general register access scheme. Besides the 32-bit formats, there are also 16-bit instructions, and short and long instructions can be mixed arbitrarily. This allows the compiler to generate parallel code wherever possible, and more compact code where the software offers no parallelism.
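The benefit of mixing short and long instructions can be estimated with simple arithmetic. The following sketch assumes, hypothetically, that an instruction with a single operation fits the 16-bit format while any parallel instruction needs 32 bits; the operation counts in `program` are made up for illustration.

```python
# Minimal sketch: code size when 16-bit and 32-bit instructions can be mixed.
# Assumption (HYPOTHETICAL): an instruction with at most one operation fits the
# 16-bit format; anything with parallel operations needs the 32-bit format.
def instr_bits(num_ops: int) -> int:
    return 16 if num_ops <= 1 else 32


# Operations per instruction for a made-up code fragment: a parallel inner
# loop body surrounded by sequential control and setup code.
program = [1, 1, 5, 5, 5, 1, 2, 1]

mixed = sum(instr_bits(n) for n in program)
fixed = 32 * len(program)
print(f"mixed: {mixed} bits, fixed 32-bit: {fixed} bits")
```

Here the mixed encoding needs 192 bits instead of 256, while the parallel loop body keeps its full 32-bit instructions.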

Tvliw example. Like the Tvec example presented in our June issue, Tvliw is a family of example models that illustrate various aspects of very long instruction word (VLIW) architectures. Its main feature is four parallel slots: two slots that control the two identical ALUs, and two slots that move data between registers and data memory. Other features are:

- Instruction compaction: In real-life code, many instructions will use fewer than four parallel operations. The unused slots then execute a NOP, and encoding these NOPs in program memory is wasteful. The remedy is to add shorter instruction formats that encode fewer parallel operations. By generating these short instructions, the compiler can optimize for code density as well as for cycle performance.

- Avoiding stalls: When the code jumps to a wide instruction that is not properly aligned in program memory, fetching that instruction takes two cycles, resulting in a pipeline stall. These stalls can be avoided if the compiler places wide instructions at aligned addresses. Two alternative techniques are supported to achieve the correct alignment, instruction elongation and instruction truncation, and both are demonstrated in the Tvliw model.

- Predication: Predicated execution is a mechanism to implement small if-statements that contain only a few operations. It avoids the pipeline hazards related to jump instructions. In addition, predicated operations can be scheduled in parallel with other operations, whereas a jump-based implementation of conditions limits parallel execution much more. In Tvliw, each of the four slots can be predicated independently of the others.
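The following sketch models if-conversion, the compiler transformation that replaces a small if-statement with predicated operations. It assumes a hypothetical two-slot instruction in which every slot carries its own guard; `run_bundle` and `if_converted` are illustrative names, not Tvliw or ASIP Designer APIs.

```python
# Minimal sketch of predicated execution (if-conversion).
# Source fragment being modeled:   if (x > 0) y = y + x; else z = z - x;
# Instead of a conditional jump, a predicate p is computed first, and both
# assignments are issued in the SAME instruction, guarded by p and !p.


def run_bundle(regs, bundle):
    """Execute one instruction. Guards and operands are read from the register
    values at the start of the instruction; only slots whose guard is true
    write back their result."""
    results = []
    for guard, dst, fn in bundle:
        if guard(regs):
            results.append((dst, fn(regs)))
    for dst, value in results:
        regs[dst] = value
    return regs


def if_converted(x, y, z):
    regs = {"x": x, "y": y, "z": z}
    # Instruction 1: compute the predicate.
    regs = run_bundle(regs, [(lambda r: True, "p", lambda r: r["x"] > 0)])
    # Instruction 2: both arms in parallel, each in its own guarded slot.
    regs = run_bundle(regs, [
        (lambda r: r["p"],     "y", lambda r: r["y"] + r["x"]),   # slot 0: if p
        (lambda r: not r["p"], "z", lambda r: r["z"] - r["x"]),   # slot 1: if !p
    ])
    return regs["y"], regs["z"]


print(if_converted(3, 10, 10))    # (13, 10)
print(if_converted(-3, 10, 10))   # (10, 13)
```

Both arms of the if-statement occupy a single instruction and no jump is executed, so there is no branch hazard to resolve, and the remaining slots of a wider instruction could carry unrelated operations.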

Compiler Techniques for Instruction-Level Parallelism. Generating efficient code for parallel architectures requires a compiler equipped with very powerful algorithms for code scheduling and optimization. A few aspects worth highlighting, all supported by ASIP Designer:

- Data flow and alias analysis. The input to the compiler is C or C++ code, which by definition is sequential. Data flow analysis and alias analysis techniques extract a maximally parallel intermediate representation from the C/C++ code. This is a crucial pass that enables optimized instruction scheduling.

- Register assignment. While standard RISC architectures typically assume a central register file, this is not a suitable solution for parallel architectures: connecting all registers to all functional units would cause significant multiplexer area overhead. Parallel architectures therefore typically come with a distributed register configuration, and the compiler must be able to optimize the assignment of values to the various register files.

- Software pipelining. To fully exploit instruction-level parallelism, the schedules generated for loop bodies must be software-pipelined. In ASIP Designer, the compiler combines well-proven iterative loop folding algorithms with state-of-the-art modulo scheduling techniques.
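Modulo scheduling starts from a lower bound on the initiation interval (II), the number of cycles between the starts of consecutive loop iterations: there must be enough cycles for every resource class and for every loop-carried dependence. The sketch below is a deliberately simplified modulo scheduler (greedy placement, no backtracking) for a hypothetical dot-product loop on a machine with one memory port, one multiplier, and one ALU. The latencies, resource counts, and function names are assumptions, and this is not the algorithm used in ASIP Designer.

```python
# Minimal sketch of modulo scheduling for a software-pipelined loop.
# Simplified: operations are placed greedily in the given order, and II is
# increased until all dependences hold. Production schedulers add priorities
# and backtracking.
from math import ceil

# HYPOTHETICAL loop body: acc += a[i] * b[i]
ops = {                      # op -> (resource class, latency in cycles)
    "ld_a": ("mem", 2),
    "ld_b": ("mem", 2),
    "mul":  ("mul", 2),
    "add":  ("alu", 1),
}
units = {"mem": 1, "mul": 1, "alu": 1}    # assumed: one unit of each class
edges = [                    # (producer, consumer, iteration distance)
    ("ld_a", "mul", 0), ("ld_b", "mul", 0),
    ("mul", "add", 0),
    ("add", "add", 1),       # loop-carried: acc from the previous iteration
]


def min_ii():
    res = max(ceil(sum(1 for r, _ in ops.values() if r == cls) / n)
              for cls, n in units.items())
    # Only self-recurrences here; a general RecMII needs a cycle search.
    rec = max([ceil(ops[p][1] / d) for p, c, d in edges if p == c and d > 0],
              default=1)
    return max(res, rec)


def try_schedule(ii):
    mrt = {cls: [0] * ii for cls in units}   # modulo reservation table
    start = {}
    for op, (cls, _) in ops.items():
        t = max([start[p] + ops[p][1] - ii * d
                 for p, c, d in edges if c == op and p in start], default=0)
        t = max(t, 0)
        for _ in range(ii):                   # at most ii distinct rows to try
            if mrt[cls][t % ii] < units[cls]:
                break
            t += 1
        else:
            return None                       # resource class is full
        mrt[cls][t % ii] += 1
        start[op] = t
    ok = all(start[c] >= start[p] + ops[p][1] - ii * d for p, c, d in edges)
    return start if ok else None


def modulo_schedule(max_ii=16):
    for ii in range(min_ii(), max_ii + 1):
        start = try_schedule(ii)
        if start is not None:
            return ii, start
    raise RuntimeError("no schedule found")


ii, start = modulo_schedule()
print(ii, start)   # 2 {'ld_a': 0, 'ld_b': 1, 'mul': 3, 'add': 5}
```

A new iteration starts every two cycles, although the add of the first iteration is only issued in cycle 5, so several iterations overlap. Setting `units["mem"] = 2`, as for a dual-ported memory like Tdsp's, lowers the bound to an II of 1.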

Contact [email protected] for more information.

More Information

For more details on these models or any other models, email [email protected].

Events

Please save the date for our upcoming ASIP Designer Workshop on Thursday, March 16, 2017:

- ASIP Designer Workshop: Please join us at Synopsys Headquarters in Mountain View, CA to learn how to automate the design, verification, and programming of ASIPs. Registration opens on February 13, 2017.


Subscribe

Interested in regular updates on ASIP news and products? Sign up for our bi-annual newsletter!